You have probably run into these ChatGPT issues yourself.

These all happen because of limited context windows in Large Language Models (LLMs). Every bracket, space, and key-value pair in JSON consumes valuable tokens, so as the data grows, less of the window is left for the model to reason with.

To fix this, we need a way to handle structured data in an LLM-optimized format — that’s where TOON comes in.

TOON (Token-Oriented Object Notation) is a lightweight data representation format designed for LLMs like ChatGPT, Gemini, or Claude. Unlike JSON or YAML, TOON reduces token usage by minimizing redundant syntax while keeping the data structured and readable.

    // JSON Format
    {
      "user": {
        "name": "Zeeshan Ali",
        "role": "Engineer",
        "skills": ["AI", "LLMs", "Cloud"]
      }
    }

    // TOON Format
    user:
      name: Zeeshan Ali
      role: Engineer
      skills: items[3]: "AI","LLMs","Cloud"

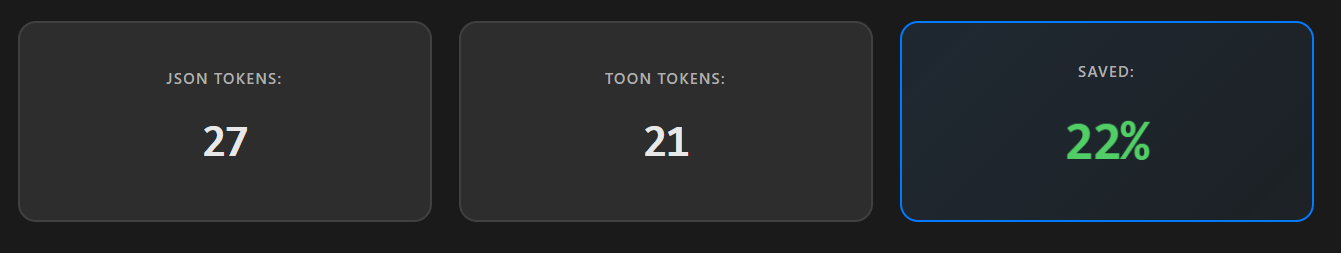

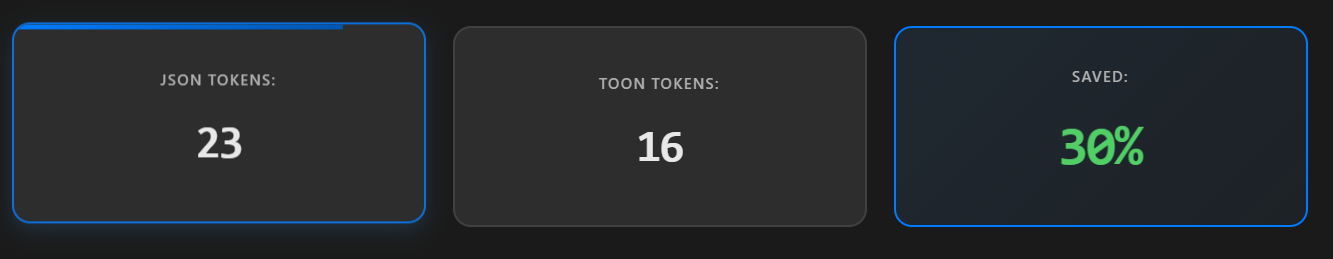

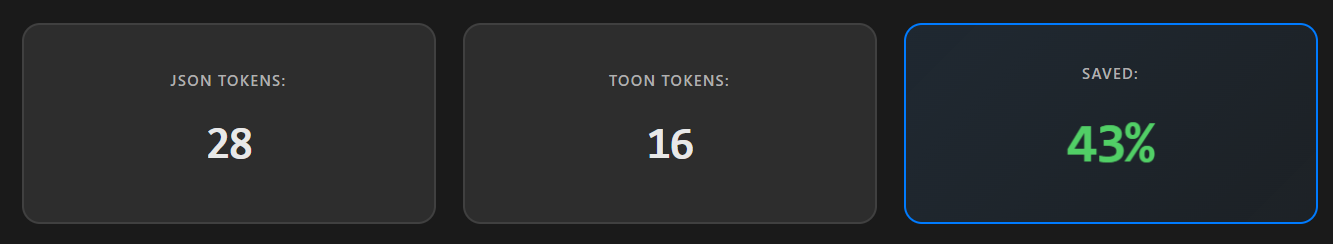

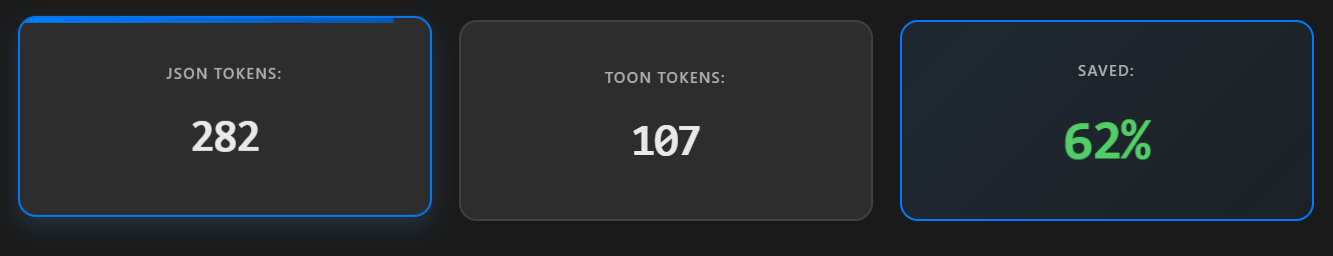

Notice how TOON removes unnecessary punctuation while keeping data structured and readable. Every saved symbol means fewer tokens — and fewer tokens mean more context room for reasoning and generation.
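
To make the saving concrete, here is a rough Python sketch that compares the JSON and TOON versions of the user record. The `rough_token_count` helper is a hypothetical stand-in, not a real tokenizer: it treats every word and every punctuation mark as one token, so the result only indicates relative size. Actual counts depend on the model's tokenizer.

```python
import json
import re

# The user record from the example in this article.
record = {
    "user": {
        "name": "Zeeshan Ali",
        "role": "Engineer",
        "skills": ["AI", "LLMs", "Cloud"],
    }
}

json_text = json.dumps(record, indent=2)

# TOON text copied from the article by hand (not produced by an official encoder).
toon_text = (
    "user:\n"
    "  name: Zeeshan Ali\n"
    "  role: Engineer\n"
    '  skills: items[3]: "AI","LLMs","Cloud"\n'
)


def rough_token_count(text: str) -> int:
    """Crude proxy: each run of word characters and each punctuation mark is one token."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def punctuation_count(text: str) -> int:
    """Count only the punctuation marks, i.e. the structural overhead."""
    return len(re.findall(r"[^\w\s]", text))


if __name__ == "__main__":
    for label, text in (("JSON", json_text), ("TOON", toon_text)):
        print(label, "rough tokens:", rough_token_count(text),
              "punctuation:", punctuation_count(text))
```

Most of the difference comes from the quotes, braces, and commas that JSON needs around every key and value. For a real measurement, swap the helper for the tokenizer of the model you are targeting.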

    // JSON Example: Nested Configuration
    {
      "app": {
        "theme": { "mode": "dark", "font": "Inter" },
        "version": "1.4.2"
      }
    }

    // TOON Equivalent
    app:
      theme:
        mode: dark
        font: Inter
      version: 1.4.2
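
The nested case can be sketched as a small recursive function. This is a minimal illustration, not an official TOON encoder: the name `encode_object` is made up for this example, it assumes two-space indentation, and it handles only nested dictionaries with string or number values (no arrays, quoting, or escaping).

```python
def encode_object(obj: dict, indent: int = 0) -> list[str]:
    """Turn a nested dict into indented 'key: value' lines (illustrative only)."""
    lines = []
    pad = "  " * indent  # assumption: two spaces per nesting level
    for key, value in obj.items():
        if isinstance(value, dict):
            # A nested object gets a bare 'key:' line, then its children one level deeper.
            lines.append(f"{pad}{key}:")
            lines.extend(encode_object(value, indent + 1))
        else:
            # Values are written as-is, without quotes.
            lines.append(f"{pad}{key}: {value}")
    return lines


config = {"app": {"theme": {"mode": "dark", "font": "Inter"}, "version": "1.4.2"}}
toon_text = "\n".join(encode_object(config))
print(toon_text)
```

Because values are written without quotes, a value that itself contains a colon or a line break would be ambiguous; a production encoder would need a quoting rule for those cases.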

    // JSON Example: Array of Objects
    {
      "employees": [
        { "name": "Ali", "department": "AI" },
        { "name": "Sara", "department": "Web" }
      ]
    }

    // TOON Equivalent
    employees: items[2]{name, department}:
      Ali, AI
      Sara, Web
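
The tabular form is where most of the saving comes from: field names are written once in the header instead of once per row. A minimal sketch of that idea follows, using the `items[N]{...}:` notation shown in this article. The function name `encode_table` is hypothetical, and the sketch assumes every row has the same keys in the same order and that no value contains a comma.

```python
def encode_table(key: str, rows: list[dict]) -> str:
    """Encode a uniform list of flat dicts as a header line plus one line per row."""
    if not rows:
        raise ValueError("need at least one row to derive the field names")
    fields = list(rows[0].keys())
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("all rows must share the same fields in the same order")
    field_list = ", ".join(fields)
    # Header: key, row count, and the field names, written once.
    header = f"{key}: items[{len(rows)}]{{{field_list}}}:"
    # Body: one indented, comma-separated line per row, values only.
    body = ["  " + ", ".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header, *body])


employees = [
    {"name": "Ali", "department": "AI"},
    {"name": "Sara", "department": "Web"},
]
print(encode_table("employees", employees))
```

The row count in the header also gives the model, or a parser, a simple way to notice a truncated table.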

    {
      "company": {
        "name": "P2PClouds",
        "founded": 2018,
        "departments": [
          {
            "name": "AI Research",
            "lead": "Zeeshan Ali",
            "employees": [
              { "name": "Sara", "role": "Data Scientist", "skills": ["Python", "TensorFlow", "LLMs"] },
              { "name": "Ahmed", "role": "ML Engineer", "skills": ["PyTorch", "NLP"] }
            ],
            "projects": [
              { "title": "TOON Format", "status": "Active", "metrics": { "efficiency": "40% gain", "adoption": "Beta" } },
              { "title": "Toxicity Detection", "status": "Paused", "metrics": { "accuracy": "92%", "recall": "88%" } }
            ]
          },
          {
            "name": "Web Development",
            "lead": "Ayesha Khan",
            "employees": [
              { "name": "Ali", "role": "Frontend Developer", "skills": ["React", "Next.js"] },
              { "name": "Bilal", "role": "Backend Developer", "skills": ["Node.js", "MongoDB"] }
            ]
          }
        ],
        "locations": ["Pakistan", "UAE", "USA"],
        "products": {
          "active": ["AI Agent", "PromptHub", "CloudSync"],
          "upcoming": ["DataVerse", "SmartLLM"]
        }
      }
    }

    company:
      name: P2PClouds
      founded: 2018
      departments: items[2]{name, lead, employees, projects}:
        AI Research, Zeeshan Ali,
          employees: items[2]{name, role, skills}:
            Sara, Data Scientist, [Python, TensorFlow, LLMs]
            Ahmed, ML Engineer, [PyTorch, NLP]
          projects: items[2]{title, status, metrics}:
            TOON Format, Active, metrics:{efficiency: 40% gain, adoption: Beta}
            Toxicity Detection, Paused, metrics:{accuracy: 92%, recall: 88%}
        Web Development, Ayesha Khan,
          employees: items[2]{name, role, skills}:
            Ali, Frontend Developer, [React, Next.js]
            Bilal, Backend Developer, [Node.js, MongoDB]
      locations: [Pakistan, UAE, USA]
      products:
        active: [AI Agent, PromptHub, CloudSync]
        upcoming: [DataVerse, SmartLLM]

🚀 Benefits of TOON Format

- Token-Efficient: Uses 30–40% fewer tokens compared to JSON, directly improving LLM throughput.
- Readable Yet Compact: Designed to be easily human-readable like YAML, but more LLM-friendly.
- Faster Processing: Less tokenization overhead means faster prompt processing and responses.
- Context Window Optimization: Ideal for multi-turn chat or long context applications.
- Cross-Compatible: Easily convertible from JSON or YAML for smooth integration.
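
Compatibility with JSON is easiest to trust when the conversion also works in reverse. As a hedged sketch, the following Python reads a tabular block written in this article's `items[N]{...}:` notation back into a JSON-ready dictionary. The name `decode_table` and the header pattern are assumptions made for this example, every cell is returned as a string, and splitting on commas means values containing commas (such as the bracketed lists in the larger example) are not supported.

```python
import json
import re

# Assumed header shape, taken from the article's examples: key: items[N]{a, b}:
HEADER = re.compile(r"^(\w+): items\[(\d+)\]\{([^}]*)\}:$")


def decode_table(text: str) -> dict:
    """Parse one tabular block into {key: [row dicts]} (all values stay strings)."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty input")
    match = HEADER.match(lines[0])
    if match is None:
        raise ValueError("first line is not a tabular header")
    key, declared_count = match.group(1), int(match.group(2))
    fields = [name.strip() for name in match.group(3).split(",")]
    rows = []
    for line in lines[1:]:
        cells = [cell.strip() for cell in line.split(",")]
        if len(cells) != len(fields):
            raise ValueError(f"expected {len(fields)} cells, got {len(cells)}: {line!r}")
        rows.append(dict(zip(fields, cells)))
    # The declared row count doubles as a truncation check.
    if len(rows) != declared_count:
        raise ValueError(f"header declares {declared_count} rows, found {len(rows)}")
    return {key: rows}


toon_block = """employees: items[2]{name, department}:
  Ali, AI
  Sara, Web
"""
print(json.dumps(decode_table(toon_block), indent=2))
```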

🧠 Applications of TOON

- Prompt Engineering – store and format system, user, and context data efficiently.
- Chatbot Memory Systems – manage multi-turn context without exceeding token limits.
- AI Agents – define roles, objectives, and tool schemas with minimal token overhead.
- Configuration Files – similar to JSON/YAML, but optimized for token-efficient parsing by LLMs.
- Data Serialization in Edge AI – transmit structured data with reduced payload size.

🌍 Why TOON Matters in the LLM Era

As AI systems evolve, context efficiency becomes more critical than model size. TOON represents a shift toward data formats that think like LLMs — efficient, structured, and context-aware.

In short, TOON helps you talk to AI in its native language — concise, meaningful, and token-smart.

Authored by Zeeshan Ali — AI Engineer, Prompt Architect, and TOON Format Advocate.